In my discussions with product design teams, they often talk about the ineffectiveness of centralized user research groups and the need to create business impact. The main issues are the time required to obtain findings and the practicality of the research in a data-informed design process. Many are frustrated.

I don’t blame them.

We use a user research approach that pairs designers and advocates to conduct fast, iterative research vital for making weekly design decisions. The learnings compound, and the benefits to the business metrics are undeniable.

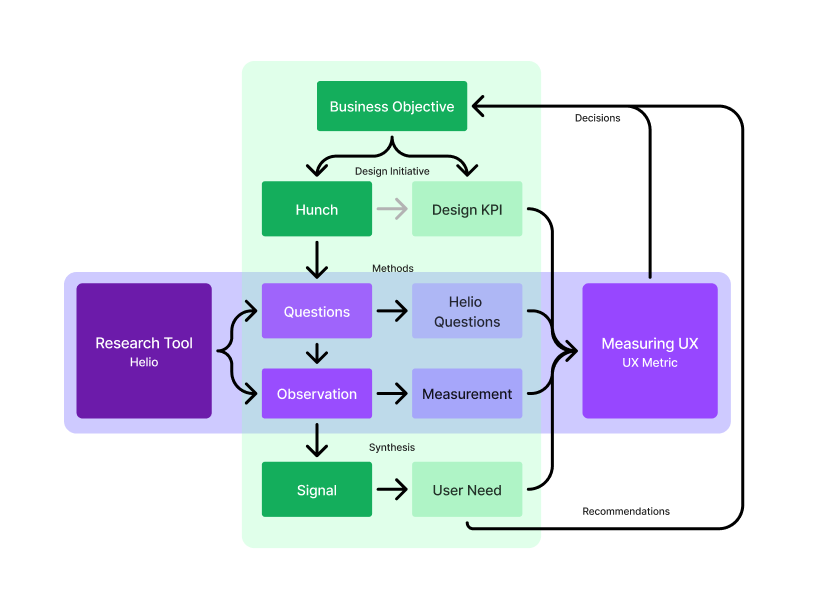

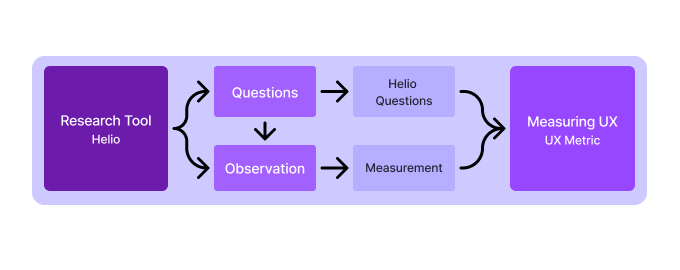

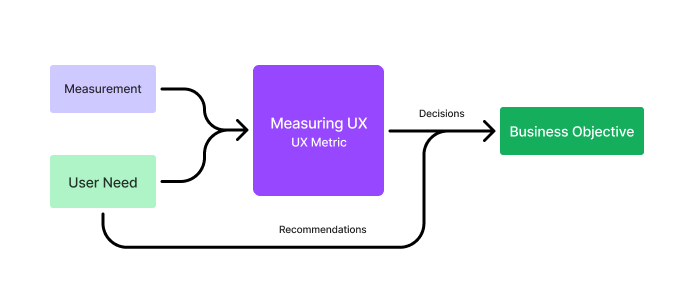

Helio helps teams use data-informed design to develop products and services that achieve business goals and deliver great user experiences. This method connects user feedback to actionable insights, offering measurements and comparisons that lead to better design decisions and align design efforts with business goals.

By incorporating UX metrics into the design process, you ensure your designs are visually appealing, highly functional, and user-focused. Whether aiming to boost user satisfaction, simplify navigation, or raise conversion rates, data-informed design provides a strong foundation for making decisions that lead to success.

What is Data-Informed Design?

Data-informed design is the practice of using data to guide and support design decisions. Unlike a purely data-driven approach, which depends entirely on numbers, data-informed design combines data insights with designers’ intuition and creativity. This approach ensures that designs are grounded in reliable data while benefiting from creative input, leading to more well-rounded and practical solutions.

A UX metric framework offers a clear understanding of user behavior, preferences, and challenges, helping to create user-friendly designs that are aligned with business goals. Using data to guide design choices, companies can develop products that connect with their users, increasing satisfaction and driving business success.

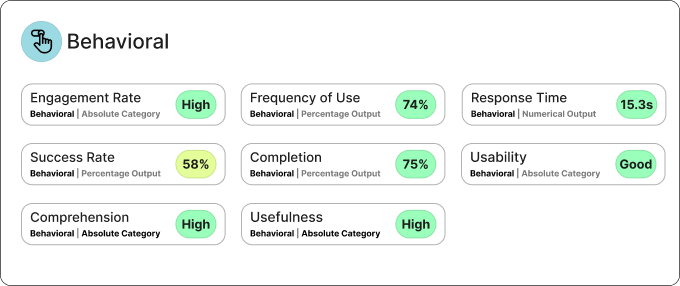

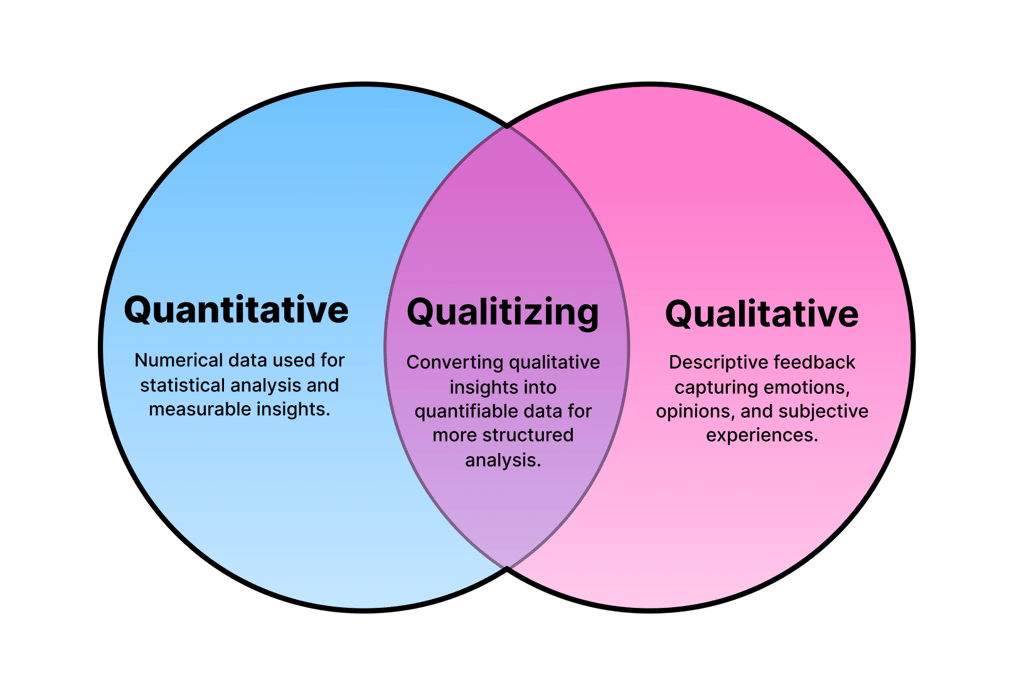

Data-informed design uses both quantitative and qualitative data to improve the design process. The behavioral UX metrics collected provide an overall rating based on either qualitative evaluation or quantitative results.

Quantitative data, like analytics and metrics, gives us clear, objective insights into user behavior. For example, you can see how people use your website, find out where they lose interest, and measure how well different design elements perform. This kind of data helps us understand what works and what doesn’t in numbers.

Qualitative data, on the other hand, gives insights into user experiences and feelings. Techniques like user interviews, surveys, and usability testing help uncover why users act as they do. These insights help us understand user motivations, preferences, and challenges, leading to more empathetic and user-focused design decisions.

Mixed Method User Research Analysis

Qualitizing combines quantitative and qualitative data to get a fuller picture of user behavior and experiences. While numbers tell us what’s happening, understanding the reasons behind those numbers adds depth to our analysis. By blending data-driven insights with user feedback, we can see how users interact with a product and why they make certain choices. This approach helps us create designs that are effective and resonate on a deeper, more personal level with users.

By balancing these two data types using Helio, designers can create more effective and user-friendly products. For example, if quantitative data shows a high drop-off rate on a particular page, qualitative data can help uncover the reasons behind it, such as confusing navigation or unappealing design. Designers can use this combined insight to make targeted improvements that enhance the user experience.

Continuously Measure and Analyze UX Metrics

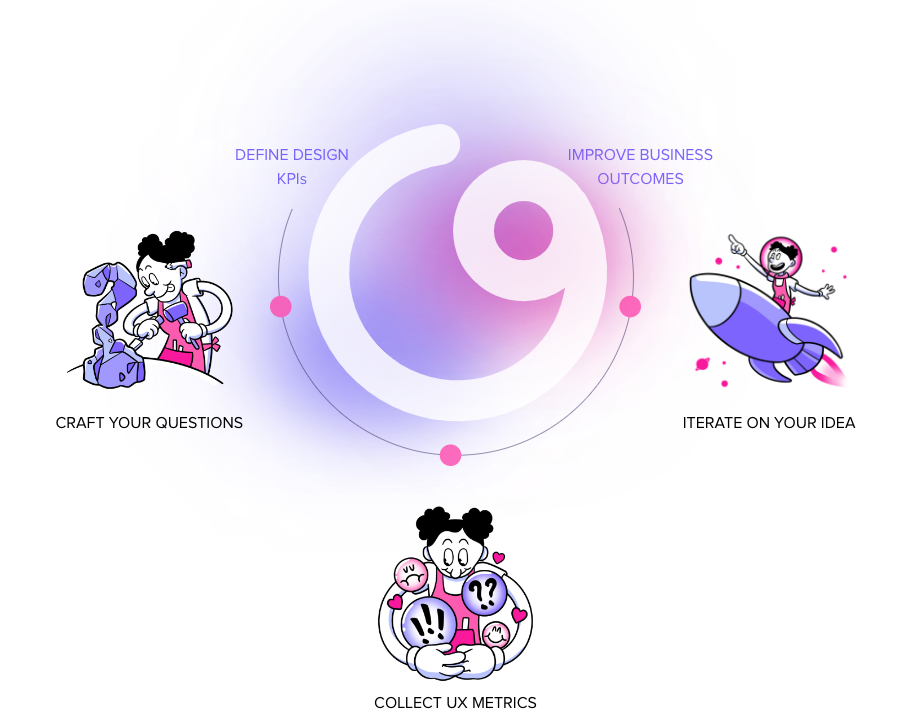

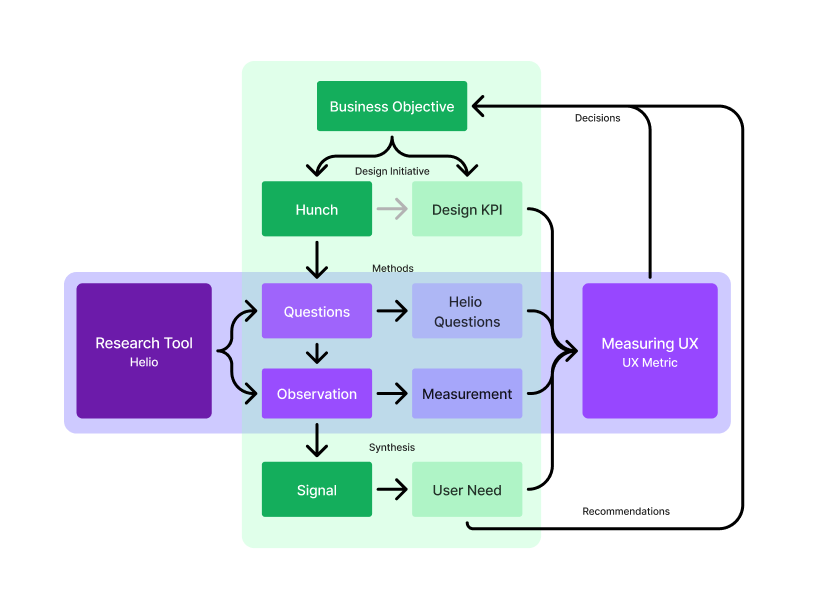

Data-informed design uses data to guide and refine design choices, helping set and measure key performance indicators (Design KPIs) like task completion rates, user satisfaction, and time on task. These metrics give designers clear goals aligned with business objectives, enabling them to track the effectiveness of their designs and make data-driven improvements.

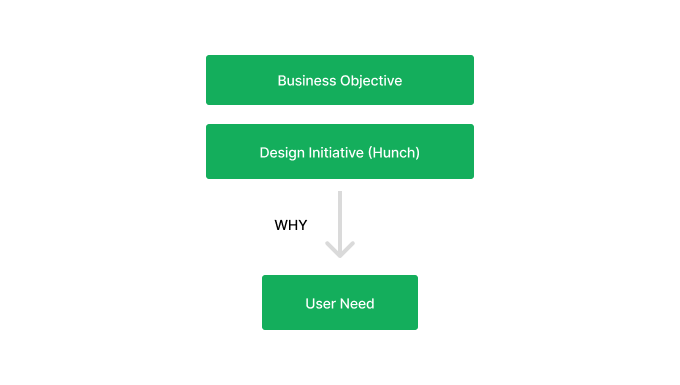

The process starts by defining business goals and aligning them with design objectives. Designers then create hypotheses based on initial data to guide their work. They collect and analyze data throughout the design process to validate decisions and make necessary adjustments.

For example, designers can gather user feedback using tools like Helio to understand user needs and preferences. This feedback informs design choices, allowing for continuous improvement. By regularly measuring and analyzing UX metrics, designers ensure the design remains aligned with user needs and business goals.

Helio Framework for Data-Informed Design

You need a solid framework to harness the power of data-informed design. This framework helps ensure that your design decisions align with business objectives and are driven by data and creativity. Here’s how you can structure your approach to data-informed design in weekly cycles.

Business Objective Alignment

Every successful design project starts with a clear understanding of the business objectives. You need to know what the organization aims to achieve with the design. Are you looking to increase user engagement, boost conversion rates, or improve user satisfaction?

Defining these objectives upfront provides a clear direction for your design efforts. It ensures your design decisions are aesthetically pleasing and strategically aligned with the company’s goals. Many businesses jump right into defining what design initiatives need to happen based on business goals, without clearly understanding the user needs.

For example, if the business aims to increase user retention, your design choices will focus on creating a seamless and engaging user experience that encourages repeat visits. This could involve optimizing the onboarding process, simplifying navigation, and enhancing the site’s or app’s overall usability.

Building a Data-Informed Design Approach

Once you’ve set your business objectives, it’s time to explore the frameworks and methods that will guide your design process. Many companies get stuck at this point, falling into the “build trap”—assuming they can jump straight into production without fully understanding the user’s needs.

To avoid this, here’s a step-by-step approach to help your team understand the reasons behind investing time in designing a clear direction.

Create Your Hunches

Start with a hypothesis or educated guess about what might improve the user experience. This initial hunch often comes from experience, intuition, or preliminary observations. It’s the starting point for your design exploration and will define what the design initiative looks like.

A design initiative refers to a specific project or set of activities aimed at achieving particular design goals, such as launching a new feature or improving user experience. It is typically aligned with business objectives and measured through relevant KPIs.

For instance, we worked with Indiana University to redesign their online website experience, combining content from eight current sites into one centralized web experience.

Design initiative example: Indiana University has a mandate to increase their application conversion rates by 3x before the year 2030.

The team started with an opportunity prioritization exercise, in which stakeholder feedback and user data were used to determine the highest-value areas of the site to consolidate and improve. This exercise revealed the Apply page as an area where great improvement was needed:

Hunch: Adding content to the current apply page and turning it into a How to Apply page will give users the confidence they need to jump into the application flow.

Use Available Data to Define Your Design KPIs

Start by gathering initial data to shape your hypotheses. This could include analyzing existing user analytics, conducting preliminary user interviews, or reviewing feedback from customer support. This information lays the groundwork for your hypotheses and helps you identify which measurements are key to defining your Design KPIs.

A Design KPI (Key Performance Indicator) measures how well a design meets specific goals related to user experience and business outcomes. Examples include usability rates, task completion rates, and the time it takes to complete important tasks. These KPIs are usually tracked through usability testing and user feedback and refined by standardizing UX metrics.

For instance, in our project with Indiana University, the team noticed that their application conversions were falling short. They set a goal to triple this number by 2030. Despite significant resources being invested to drive potential students to the website, the site was disorganized, with over 300 pages spread across 8 different domains. With 87% of traffic coming from mobile devices, it was clear where improvement efforts should be focused.

Using this initial data, we examined the flow leading to the application process and identified the Apply page as a key area needing significant improvement.

Choosing Design KPIs

Establish specific, measurable, and achievable KPIs for design. These KPIs provide clear targets for your design efforts and help you measure success. Common design KPIs include:

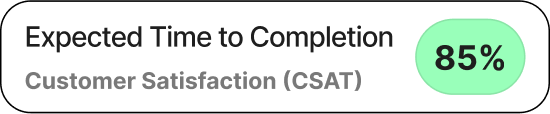

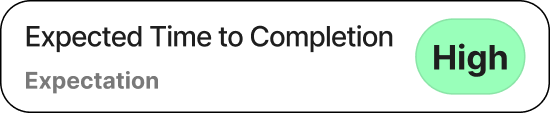

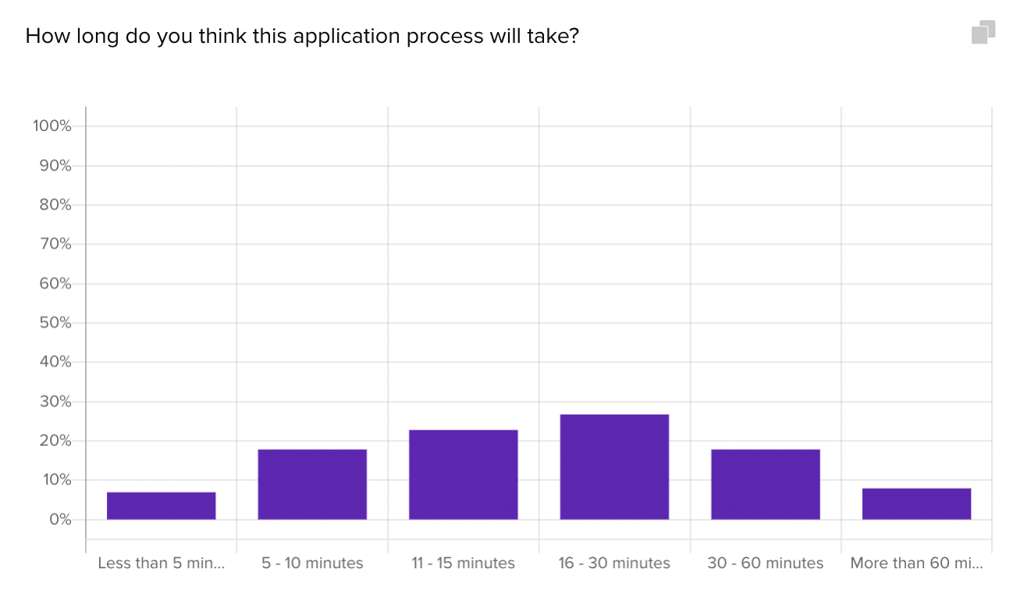

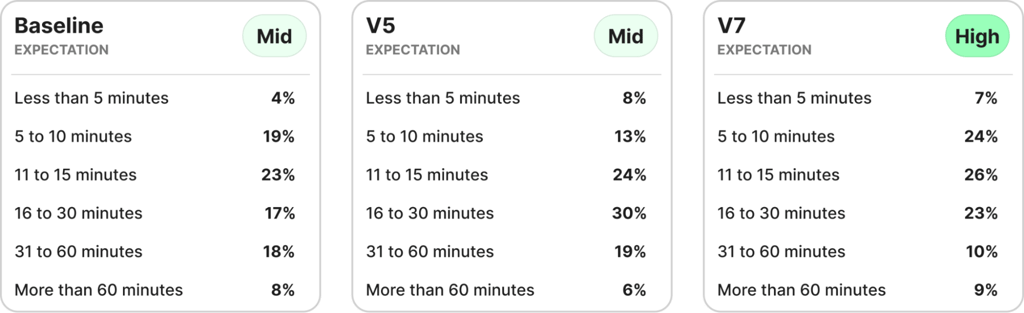

Expected Time to Completion: In this example, we focused on the user’s expected time to completion as the Design KPI to measure users’ willingness to start the application process. We used Customer Satisfaction (CSAT) and Expectations as metrics to measure these expectations and alignment.

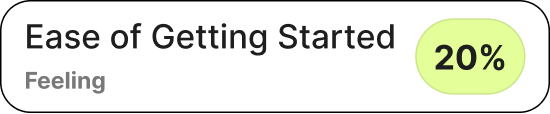

Ease of Getting Started: Understanding users’ emotions when getting started helps ensure we’re empowering users before they engage in a new flow.

Setting these KPIs ensures that your design efforts are focused on tangible, measurable outcomes. For instance, if you’re redesigning a website to improve user engagement, you might set a KPI to reduce the average time to complete a task by 20%.
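To make a target like "reduce time on task by 20%" concrete, here is a minimal sketch of how such a check could be computed. The session records, field names, and helper functions are hypothetical and invented for illustration; they are not part of Helio or any particular analytics tool.

```python
from statistics import mean


def completion_rate(sessions):
    """Share of sessions in which the task was completed."""
    return sum(1 for s in sessions if s["completed"]) / len(sessions)


def mean_time_on_task(sessions):
    """Average seconds on task, counting only completed sessions."""
    return mean(s["seconds"] for s in sessions if s["completed"])


def meets_reduction_target(baseline_seconds, current_seconds, target=0.20):
    """True when time on task fell by at least `target` (0.20 means 20%)."""
    reduction = (baseline_seconds - current_seconds) / baseline_seconds
    return reduction >= target


# Hypothetical sessions, invented for illustration only.
baseline = [
    {"completed": True, "seconds": 100},
    {"completed": True, "seconds": 120},
    {"completed": False, "seconds": 200},
    {"completed": True, "seconds": 80},
]
redesign = [
    {"completed": True, "seconds": 70},
    {"completed": True, "seconds": 90},
    {"completed": True, "seconds": 80},
    {"completed": False, "seconds": 150},
]

baseline_time = mean_time_on_task(baseline)
redesign_time = mean_time_on_task(redesign)
print("Completion rate (redesign):", completion_rate(redesign))
print("KPI met:", meets_reduction_target(baseline_time, redesign_time))
```

Counting only completed sessions for time on task is one possible design choice; a team might instead cap or separately report abandoned sessions.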

Putting All the Design Pieces Together

Let’s put this framework into a practical scenario to define these sections.

We worked with Indiana University’s online team to redesign their student website and aggregate content across several different domains that currently existed:

- Business Objective: The primary objective of this project is to redesign and consolidate the IU Online student-facing websites and increase conversions tied to new and current enrollments and student success in IU online courses and programs. The team had established a goal to raise that number by 3x before the year 2030.

- Design initiative: Redesign the application page to increase user comprehension and willingness to start the application process.

- Hunch: Adding content to the page such as how-to steps, campus imagery, and student testimonials will increase application conversions.

A large amount of resources is being used to drive potential students to the website, but the site is currently incoherent, with 300+ pages spread across 8 different domains. The current traffic to the site is 87% mobile/13% desktop, which provides guidance on where to direct improvement efforts.

- Design KPIs

Increase application views, and improve engagement on the application flow.

Here are the UX Metrics that were defined to meet the Design KPI criteria:

- Customer Satisfaction (CSAT)

- Expectations

- Feeling

With these KPIs set, we started refining the design, concentrating on updating brand imagery and adding more content to the page. We used our hunches to create questions that would help us gather the right observations. This process is repeated weekly across 40 different design initiatives.

Creating Baselines

We tested the baseline against the newest iterations, and used the data to continue iterating until we reached a Sentiment and Reaction score that the team could be proud of.

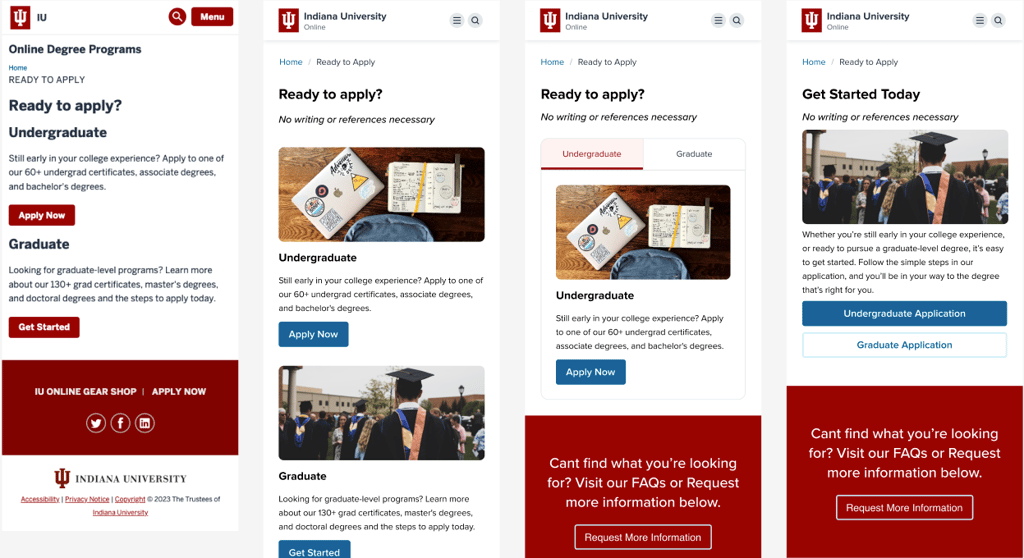

With vast improvements needed, the team began by throwing out hunches about what they thought would work well on the page, including concepts such as adding imagery, using a tabbed structure to separate primary actions, and including a numbered list of steps:

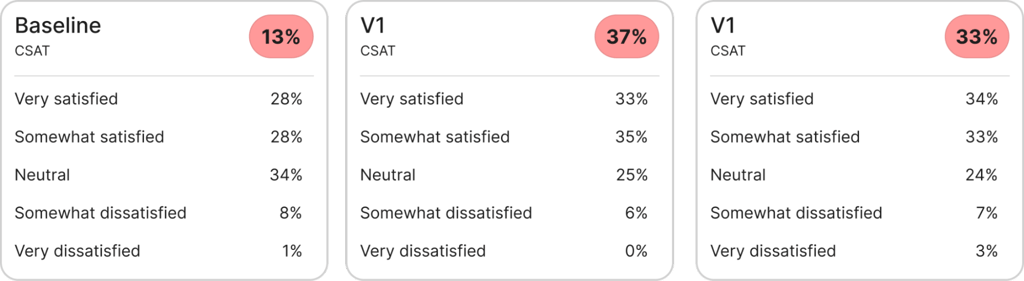

To determine whether the choices we had provided across these page variations were effective, we sent each version of the Apply page to 100 participants in an audience of Current and Prospective College Students (US).

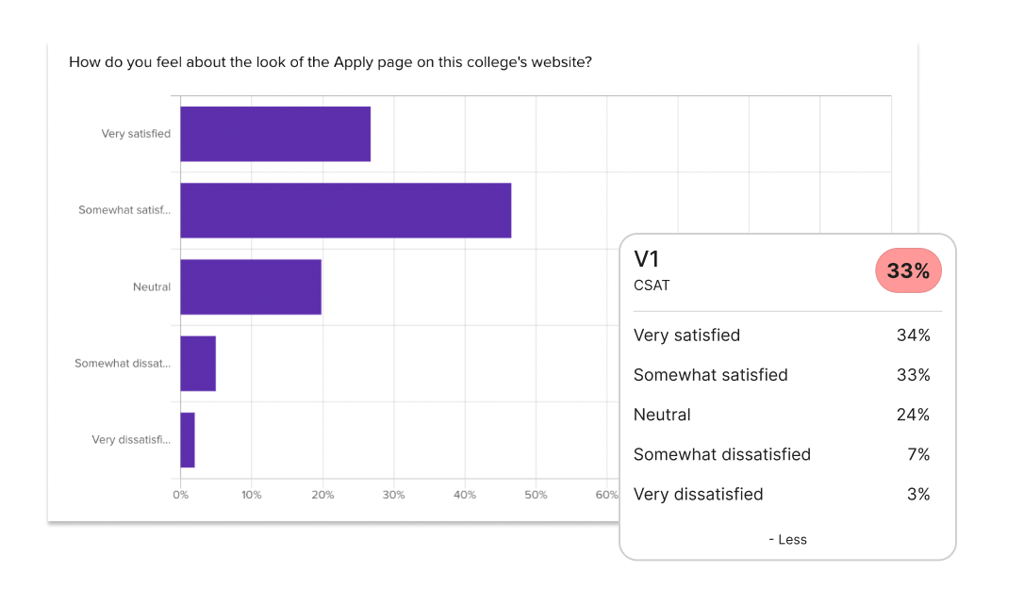

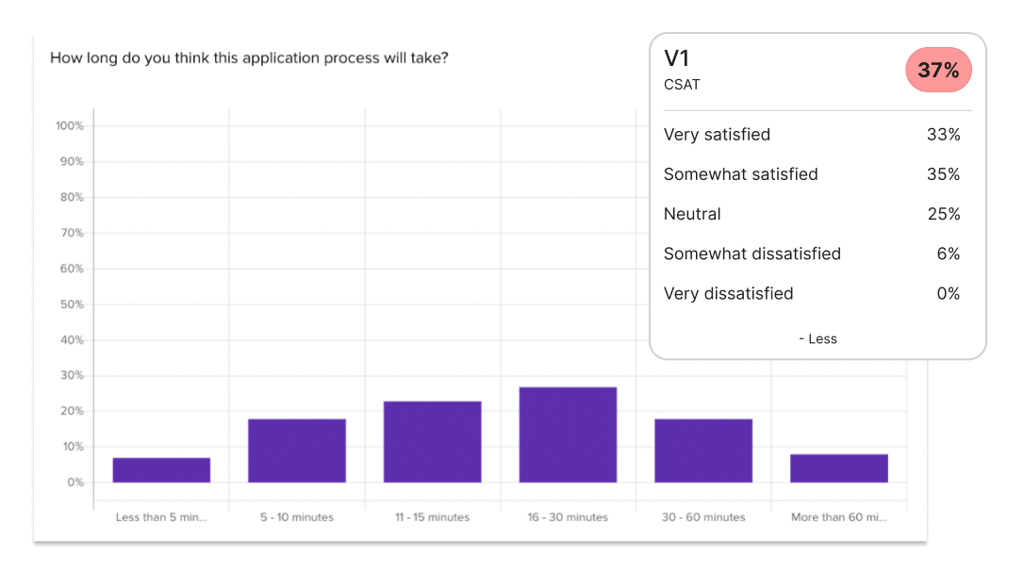

For each version of the page, we asked participants to interact with the design by clicking through it and then answer some questions. These questions focused on their overall satisfaction with the page’s appearance, how long they thought the application would take based on the information provided, and how easy they expected the application process to be.

We sent out the tests to collect responses overnight and returned the next day to review the data and share our findings with the IU team.

We quickly noticed that the visual updates to the page, like adding images and changing brand colors, increased overall satisfaction by up to 24%. However, the written reactions from participants showed that something was still missing.

What do you expect to see when you click Get Started?

“A page explaining the next steps to take to pursue the degree. Explaining the process.” – Undergraduate Student (US)

Student feedback like this suggested that we needed to add more details to the Apply page so participants would feel ready to start the application process without needing extra information.

Based on these initial findings, the team added more options for users, like numbered steps for applying and a student testimonial.

Each of these new versions clearly increased satisfaction with the look of the page, validating that the new content we offered to visitors makes a significant impact:

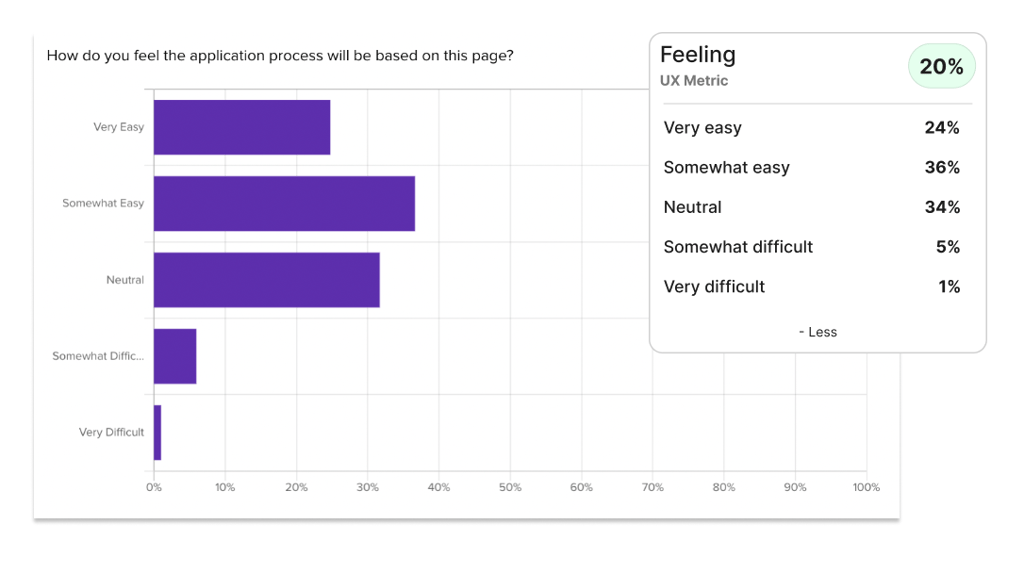

A clear sign of success: the expected time to complete the application dropped from 30 minutes to just 15 minutes in the latest version, which included a student testimonial.

All of this was achieved in just one week, leaving the Indiana University team confident that their new Apply page would engage and convert users at a higher rate.

By aligning your design efforts with business goals and using data to guide your decisions, you can create a more effective, user-friendly design that meets both user needs and business objectives. This data-informed approach not only enhances the user experience but also drives measurable business results, demonstrating the value of design in achieving strategic goals.

The framework for data-informed design starts with aligning your efforts with business objectives, forming a clear hypothesis, collecting and analyzing initial data, and setting measurable KPIs. This structured approach ensures that your designs are both creatively inspired and backed by data, leading to more successful and impactful outcomes.

Helio as a Research Tool

To successfully apply data-informed design, you need strong research tools and methods to gather and analyze data. Helio is a powerful tool that helps collect both qualitative and quantitative data about user preferences and behaviors. Here’s how to use Helio and other methods to enhance your design process.

Questions and Observation

Helio allows you to ask targeted questions and observe user interactions, essential for gathering qualitative data. You can dive deep into understanding user preferences, motivations, and pain points by posing specific questions. For instance, you can ask users about their experience with a particular feature or what improvements they want. Observing how users interact with your design in real time provides invaluable insights into usability issues and areas for improvement.

Imagine you’re redesigning a mobile app. Use Helio to create a set of questions to understand how users navigate the app. Observe their interactions to identify any friction points or confusing elements. This qualitative data helps you empathize with users and make informed design decisions.

Choosing the Right Helio Questions

Helio offers various types of questions to gather diverse insights. Multiple-choice questions can quickly highlight user preferences and issues, while open-ended questions provide more profound, qualitative feedback. Here’s how to utilize these effectively:

Likert Scale Questions

Example: Indiana University asked for initial Reactions to their Apply page baseline using the CSAT research method.

UX Metric: Use these quantitative questions to gauge customer satisfaction (CSAT) with the design.

Multiple-Choice Questions

Example: One measure of whether Indiana University’s Apply page was improving was participants’ expectations of how long the application process would take.

UX Metric: Use this question type for establishing Reactions in the form of expectations with a product or process.

Likert Scale Questions

Example: After reading through the information on the IU Apply page, participants were asked a final question about how easy they felt the application process would be.

UX Metric: Another way to use this question type is to ask for Feelings about a product after participants experience it.

Combining these question types gives you a comprehensive view of user needs and preferences, enabling you to make data-informed design decisions that address real user concerns.
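As a rough illustration of how answers to these question types can be turned into numbers, the sketch below scores a Likert question as a top-two-box CSAT percentage and finds the most-selected option of a multiple-choice question. The top-two-box convention (ratings of 4 or 5 on a 1 to 5 scale count as satisfied) is a common one but is an assumption here, not necessarily how Helio calculates its scores, and all responses are invented.

```python
from collections import Counter


def csat_score(ratings, satisfied_from=4):
    """Percent of 1-5 Likert ratings at or above `satisfied_from`."""
    satisfied = sum(1 for r in ratings if r >= satisfied_from)
    return 100 * satisfied / len(ratings)


def most_common_answer(answers):
    """The multiple-choice option that was selected most often."""
    return Counter(answers).most_common(1)[0][0]


# Hypothetical responses, invented for illustration only.
baseline_ratings = [2, 3, 4, 3, 5, 2, 4, 3, 3, 4]
variant_ratings = [4, 5, 4, 3, 5, 4, 4, 2, 5, 4]
expectations = ["30 min", "15 min", "15 min", "1 hour", "15 min", "30 min"]

baseline_csat = csat_score(baseline_ratings)
variant_csat = csat_score(variant_ratings)
print("CSAT change in points:", variant_csat - baseline_csat)
print("Most common time expectation:", most_common_answer(expectations))
```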

Practical Application

Let’s look at a practical application of these research tools and methods. Suppose you’re tasked with redesigning an e-commerce website to improve user experience and increase sales.

Use Helio to Ask Questions and Observe:

- Initial Questions: Start by asking users about their current experience with the website. Questions like, “What do you find most challenging when shopping on our site?” can reveal common pain points.

- Observation: Observe how users navigate the site. Pay attention to where they hesitate or struggle, which provides clues on areas needing improvement.

Utilize Helio Questions:

- Multiple-Choice Questions: Ask users to rate their satisfaction with different aspects of the site, such as product search, checkout process, and overall usability.

- Open-Ended Questions: Encourage users to share any additional feedback or suggestions for improvement. Questions like, “What features would you like to see added?” can generate valuable insights.

Implement Measurement Techniques:

- A/B Testing: Test different homepage versions to see which layout enhances user engagement. For example, compare a minimalistic design with a more feature-rich one.

- User Testing: Conduct sessions where users complete specific tasks, such as finding a product and purchasing. Note any difficulties they encounter and gather their feedback on the process.

- Analytics Tracking: Monitor user behavior through analytics tools to track metrics like bounce rates, average session duration, and conversion rates. Use this data to identify trends and measure the impact of your design changes.
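For the A/B testing step above, one common way to judge whether a difference between two versions is more than noise is a two-proportion z-test. The following is a minimal sketch using only the Python standard library; the function name and the visitor counts are hypothetical, and real experiments usually need more care around sample size and stopping rules.

```python
import math


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: minimalistic homepage (A) vs. feature-rich homepage (B).
z, p_value = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the two layouts really do convert differently; what threshold counts as "small" is a team decision made before the test runs.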

By consistently using these research tools and methods, you can create a design that not only looks good but also meets user needs and achieves business goals. The insights from Helio and other measurement techniques help you make informed decisions, leading to a more user-friendly and successful product.

This data is essential for data-informed design, helping you create designs that are both user-focused and aligned with business objectives. By asking the right questions, observing how users interact, and analyzing key metrics, you can keep improving your designs and deliver outstanding user experiences.

Measurement Techniques

Implementing various measurement techniques is crucial in validating your design decisions and ensuring they lead to desired outcomes. Here are some effective techniques you can use. Helio really shines when you need measurements before you build or launch designs.

- Pre-market: Pre-market remote user surveying involves gathering insights and feedback from potential users about a product or service before its launch. This helps to understand market needs and preferences. This process aids in refining the product and shaping its development to meet user expectations better.

- Post-market: Post-market analytics collects feedback from actual users after the product or service has been launched to assess their satisfaction and identify any issues. This information is crucial for improving the product, addressing problems, and enhancing future versions.

Combining these measurement techniques lets you gather a wealth of data to inform your design decisions. For instance, start with A/B testing to identify the most effective design variations, then use user testing to gather qualitative feedback on those variations, and finally, track user behavior with analytics to monitor the long-term impact of your design changes.

Choosing the Right UX Metrics

In addition to guiding design decisions, UX metrics provide a structured way to communicate the value of design work to stakeholders. By translating abstract user experiences into concrete data, these metrics make it easier to demonstrate how design improvements contribute to achieving business goals. This data-driven approach not only supports better decision-making but also builds trust in the design process.

One common design challenge is effectively using data. Many metrics and evaluations come from users outside a system and correlate with product and business KPIs.

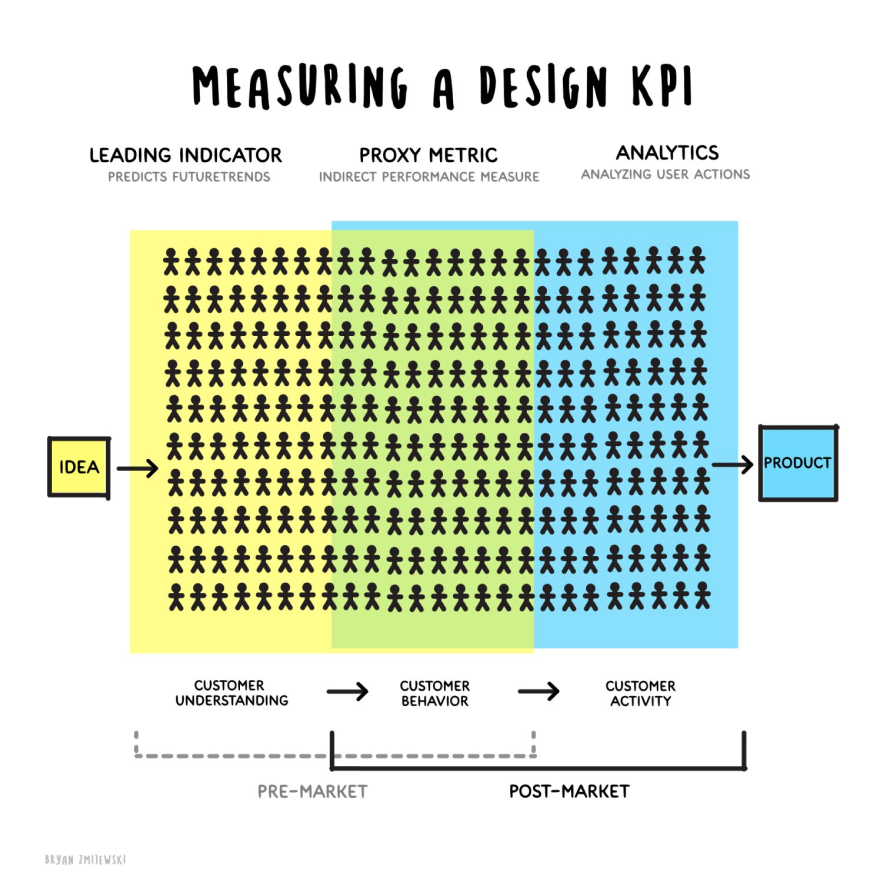

This can make it difficult for design teams to show and explain the value of their work because it adds an extra step in linking their efforts to results. A proxy metric and a leading indicator are used to evaluate performance and predict outcomes, but they have different purposes and characteristics. In design, they are helpful because most design work is conceptual and based on insights before it goes to production.

Leading Indicator

Predicts future performance from early signals or research. A leading indicator is a predictive metric that signals future performance or outcomes. In the context of design, it provides early signals, often derived from user research, that help predict the success of an idea or design before it is fully implemented.

Proxy Metric

Indirect measure approximating user actions when direct metrics aren’t feasible. A proxy metric is an indirect measurement that approximates or represents a user action when direct measurement is difficult or impractical. It serves as an intermediary to track progress and validate design decisions, particularly in cases where direct metrics are not feasible.

Analytics

Analyzes user interactions to evaluate product effectiveness. Analytics involves the detailed analysis of user actions to understand how customers interact with a product and to identify areas for improvement. Analytics often focus on quantitative data, such as click-through rates, session durations, and user retention rates, to assess the effectiveness of design and product features.

Types of UX Measurements

UX Metrics reveal how users are engaging with a product or service. To create a well-rounded user experience, it’s important to consider both Behavioral and Attitudinal UX metrics.

Behavioral metrics, such as usability, engagement, and completion, focus on how users interact with the product and its effectiveness in meeting their needs. Attitudinal metrics, like sentiment, desirability, and NPS, provide insights into users’ emotions, satisfaction, and perceptions.

By combining these metrics, designers can ensure that products are both functional and emotionally resonant, driving user engagement and long-term success.

Behavioral Metrics

- USABILITY: Ensures users can easily and quickly use the product to do what they want.

- COMPREHENSION: Ensures users understand the product, how it works, and what it can do for them.

- ENGAGEMENT: Tracks how often and how long users interact with the product, showing their interest and involvement.

- RESPONSE TIME: Measures how quickly users respond, affecting user satisfaction and perceived performance.

- VIABILITY: Looks at whether the design is practical, sustainable, and fits with business goals for long-term success.

- COMPLETION: Measures how often users successfully finish tasks or reach goals, showing how effective the product is.

- FREQUENCY: Measures how often users return to the product or specific features, indicating loyalty and engagement.

- SUCCESS: Evaluates the rate at which users achieve their goals or complete key tasks within the product.

Attitudinal Metrics

- SENTIMENT: Collects overall feelings and attitudes about the product to understand user satisfaction and loyalty.

- FEELING: Describes users’ emotions when using the product, which affects the experience and willingness to stick around.

- DESIRABILITY: Checks how attractive and appealing the product is to users, affecting their initial and ongoing interest.

- REACTION: Captures users’ immediate emotional responses, providing quick insights into their first impressions and perceptions.

- USEFULNESS: Assesses how helpful users find the product in achieving their goals, reflecting the perceived value.

- NPS (Net Promoter Score): Measures how likely users are to recommend the product to others, indicating satisfaction and loyalty.

- CSAT (Customer Satisfaction): Measures overall customer satisfaction with the product, giving direct feedback on its performance.

- BRAND SCORE: Evaluates users’ perception of the brand associated with the product, reflecting brand strength and alignment with user values.

- POST-TASK SATISFACTION: Measures how satisfied users feel immediately after completing a task, providing immediate feedback on specific interactions.

This categorization helps in focusing on both the behaviors that drive user interaction and the attitudes that shape user experience and loyalty.

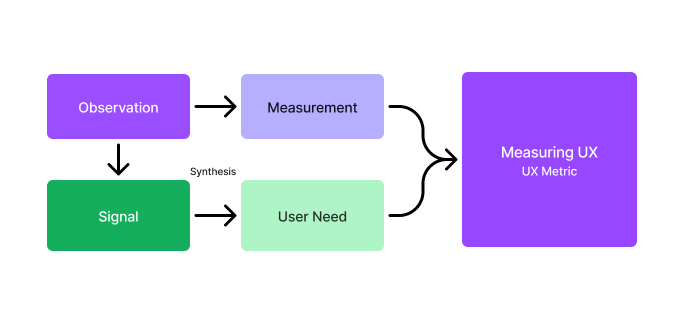
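Of the attitudinal metrics listed above, NPS has a widely used standard formula: the percentage of promoters (ratings of 9 or 10 on a 0 to 10 scale) minus the percentage of detractors (ratings of 0 to 6). Here is a minimal sketch of that calculation; the function name and the sample ratings are hypothetical.

```python
def net_promoter_score(ratings):
    """NPS from 0-10 'how likely are you to recommend' ratings.

    Promoters score 9-10 and detractors score 0-6. Passives (7-8)
    only count toward the total number of responses.
    """
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical ratings, invented for illustration only.
ratings = [10, 9, 8, 7, 6, 10, 3, 9, 8, 10]
print("NPS:", net_promoter_score(ratings))
```

The result ranges from -100 (all detractors) to 100 (all promoters).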

Synthesizing Metrics into Design

Translate data signals into precise user needs. This involves identifying patterns and trends in the data that indicate user preferences and pain points. Prioritize user needs based on their impact on business objectives and the frequency of the issues identified. This ensures that the most critical user needs are addressed first.
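One simple way to prioritize along these two dimensions is to score each need as impact multiplied by frequency and sort by the result. This is only a sketch under stated assumptions: the 1 to 5 impact scale, the `UserNeed` class, and the example needs are all hypothetical, and a team may well weight the two factors differently.

```python
from dataclasses import dataclass


@dataclass
class UserNeed:
    name: str
    impact: int     # 1-5, judged effect on the business objective (assumed scale)
    frequency: int  # number of participants who raised the issue

    @property
    def score(self):
        return self.impact * self.frequency


def prioritize(needs):
    """Sort needs by impact x frequency, highest score first."""
    return sorted(needs, key=lambda need: need.score, reverse=True)


# Hypothetical needs, invented for illustration only.
needs = [
    UserNeed("No imagery on the page", impact=3, frequency=25),
    UserNeed("Hard-to-read footer links", impact=1, frequency=7),
    UserNeed("Unclear next steps after Get Started", impact=5, frequency=18),
]

for need in prioritize(needs):
    print(need.score, need.name)
```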

Making Data-Informed Decisions

Making decisions based on a combination of quantitative and qualitative data sets your projects up for success. This approach ensures that your designs are user-centric and aligned with business goals. Let’s explore how to synthesize data effectively and examine some real-world case studies showcasing the power of data-informed design.

Combining quantitative and qualitative data is key to data-informed design, offering a full view of user behavior and preferences.

Quantitative Data: Metrics like page views and conversion rates tell you what’s happening on your site or app. For example, a high drop-off rate in checkout indicates a problem, but it doesn’t explain why.

Qualitative Data: Insights from user surveys and usability tests reveal the reasons behind user actions. For instance, users might find a step in checkout confusing, explaining the drop-off.

Combining Data for Decisions: Start with key metrics from quantitative data, then use qualitative insights to understand and address the issues, leading to more effective design decisions.
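The checkout example can be sketched in code: first use funnel counts to find the step where the largest share of users leave, then tally the tags a researcher has assigned to qualitative feedback about that step. The funnel numbers, feedback tags, and function names below are hypothetical and exist only to show the shape of the analysis.

```python
from collections import Counter


def biggest_drop_off(funnel):
    """Return (step, drop_rate) for the step that loses the largest share of users.

    `funnel` is an ordered list of (step_name, users_reaching_step). The drop
    is attributed to the step users were on when they left.
    """
    worst_step, worst_rate = None, 0.0
    for (step, users_here), (_, users_next) in zip(funnel, funnel[1:]):
        rate = 1 - users_next / users_here
        if rate > worst_rate:
            worst_step, worst_rate = step, rate
    return worst_step, worst_rate


def top_reasons(feedback, step, n=2):
    """Most frequent researcher-assigned tags on feedback about `step`."""
    tags = Counter(tag for feedback_step, tag in feedback if feedback_step == step)
    return tags.most_common(n)


# Hypothetical data, invented for illustration only.
funnel = [("cart", 1000), ("shipping", 800), ("payment", 640), ("confirmation", 256)]
feedback = [
    ("payment", "confusing form"),
    ("payment", "confusing form"),
    ("payment", "missing payment option"),
    ("shipping", "slow page"),
    ("payment", "confusing form"),
]

step, rate = biggest_drop_off(funnel)
print(f"Largest drop-off: {step} ({rate:.0%})")
print("Likely reasons:", top_reasons(feedback, step))
```

The quantitative pass tells you where to look; the qualitative tally suggests what to fix first.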

Indiana University Design Decisions

- Add imagery to Apply page: The baseline apply page included no imagery whatsoever, and therefore produced very low Satisfaction (CSAT) and Expectations for the application process. Adding imagery to the page immediately improved CSAT, and allowed the team to introduce their new brand styles to the page.

- Add how-to steps to Apply page: A key goal for the Apply page was to reduce participants’ time expectations before they entered the application process. Providing five how-to steps on the page helped bolster the improved satisfaction and set clearer expectations for the application.

- Add student testimonial to Apply page: The team had a hunch that a student testimonial might work well to convince participants of the ease of completing the application process. With the addition of the student quote speaking to how quick the application is to complete, we saw our first significant improvement in Expectations for the time requirement, while maintaining the improved Customer Satisfaction and Feeling scores that we had achieved with earlier versions.

Recommendations and Best Practices for Data-Informed Design

To successfully integrate data into your design process, follow these best practices:

- Start with Clear Objectives: Define every project’s business and design objectives. Knowing your aim helps guide your data collection and analysis efforts.

- Use the Right Tools: Leverage tools like Helio to gather qualitative and quantitative data. These tools provide a comprehensive view of user behavior and preferences, essential for making informed design decisions.

- Balance Quantitative and Qualitative Data: Quantitative data gives you the numbers, while qualitative data provides the context. Combine these data types to understand user needs and behaviors fully. For example, analytics can be used to identify a drop-off point and user feedback to understand why it happens.

- Set Measurable KPIs: Establish specific, measurable, and achievable KPIs for your design projects. Common KPIs include task completion rates, user satisfaction scores, and time on task. These metrics help you track progress and measure the impact of your design changes.

- Iterate and Test: Design is an iterative process. Use concept testing to compare design variations and continuously refine your designs based on user feedback and data insights. Regular testing helps ensure that your designs remain effective and user-friendly.

- Involve Stakeholders Early: Engage stakeholders early in the design process to align on objectives and expectations. Regularly share data insights and progress to keep everyone on the same page.

- Document and Share Findings: Document your data collection methods, findings, and design decisions. Sharing this documentation with your team ensures transparency and helps inform future projects.

Common Pitfalls and How to Avoid Them

While data-informed design offers many benefits, it’s essential to be aware of common pitfalls and how to avoid them:

- Over-Reliance on Data: Relying solely on data can stifle creativity and lead to designs that lack innovation. Remember to balance data insights with designer intuition and creativity. Use data to inform, not dictate, your design decisions.

- Ignoring Qualitative Insights: Focusing only on quantitative data can overlook the deeper insights qualitative feedback provides. Consider the context behind the numbers to fully understand user behavior and preferences.

- Failing to Set Clear Objectives: Without clear objectives, your data collection efforts can become unfocused and ineffective. Always start with well-defined goals to guide your design process.

- Skipping Iteration: Design is an iterative process. Skipping iteration and testing can result in designs that don’t fully meet user needs. Regularly test and refine your designs based on data and user feedback.

- Not Engaging Stakeholders: Keeping stakeholders out of the loop can lead to misaligned expectations and goals. Engage stakeholders early and regularly to ensure alignment and support throughout the project.

- Misinterpreting Data: Misinterpreting data can lead to incorrect conclusions and poor design decisions. Ensure you understand the data you’re working with and consider multiple perspectives to avoid biases.
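
One common way to misread data is to treat a small difference between two concepts as meaningful when the sample is small. The sketch below shows one possible guard, assuming completion is recorded as a simple success count per concept: a Wilson score interval around each completion rate. The function name and the 95% level (`z = 1.96`) are choices made for this example, not part of any specific tool.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    # Clamp to [0, 1] to absorb floating-point error at the extremes.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Two hypothetical concepts, each tested with 10 participants.
concept_a = wilson_interval(8, 10)  # 80% observed completion
concept_b = wilson_interval(6, 10)  # 60% observed completion

# If the intervals overlap, the observed gap may be noise.
intervals_overlap = concept_a[0] <= concept_b[1] and concept_b[0] <= concept_a[1]
```

With only ten participants per concept, the two intervals overlap, so an 80% versus 60% result is a prompt for more testing or for qualitative follow-up, not a verdict.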

Balancing Quantitative and Qualitative Data

Data-informed design combines the best of both worlds, empirical data and creative intuition, to create user-centric designs that achieve business goals. With this approach, a team working in weekly cycles can tackle five or so concepts.

By balancing quantitative and qualitative data, setting clear objectives, and following best practices, you can make informed design decisions that enhance user experience and drive success. Avoid common pitfalls by maintaining a balanced approach and engaging stakeholders.

Start incorporating data into your design processes by leveraging tools like Helio to gather comprehensive insights, and don’t shy away from combining numbers with creative intuition. For further reading and resources, explore articles on UX metrics, design KPIs, and data-informed design strategies. By embracing a data-informed approach, you can create designs that look great and deliver real, measurable value to your users and your business.

Incorporate these strategies and best practices into your next project to see the transformative power of data-informed design. Happy designing!

Data-Informed Design FAQ

What is data-informed design?

Data-informed design is the practice of using data to guide and support design decisions. Unlike a purely data-driven approach that relies solely on numbers, data-informed design combines data insights with designers’ intuition and creativity. This approach ensures that designs are grounded in reliable data while also benefiting from creative input, leading to more well-rounded and practical solutions.

How does data-informed design improve user experience?

Data-informed design improves user experience by using both quantitative and qualitative data to understand user behavior and preferences. This approach helps designers make informed decisions that align with user needs, ensuring that products are user-friendly, functional, and aligned with business goals. The result is a design that resonates with users, leading to increased satisfaction and better business outcomes.

What are the key metrics in data-informed design?

Key metrics in data-informed design include usability, comprehension, engagement, response time, and completion rates. These metrics help track how effectively a design meets user needs and business objectives. By regularly measuring these metrics, designers can make data-informed improvements that enhance the user experience.

How do I start implementing data-informed design?

To start implementing data-informed design, begin by setting clear business objectives and aligning them with your design goals. Gather initial data through user analytics, surveys, or interviews to shape your hypotheses. Use this data to identify key performance indicators (KPIs) that will guide your design decisions and track progress over time.

What tools support data-informed design?

Tools like Helio are valuable for implementing data-informed design. Helio allows you to gather both qualitative and quantitative data about user preferences and behaviors, providing a comprehensive view of user needs. Other useful tools include analytics platforms for tracking metrics and usability testing tools for collecting direct user feedback.

Why is it important to balance qualitative and quantitative data?

Balancing qualitative and quantitative data is crucial because it provides a full understanding of user behavior. Quantitative data gives you the numbers and trends, while qualitative data provides context and insights into the reasons behind those numbers. This combination helps you create designs that are not only effective but also resonate emotionally with users.

What are the common pitfalls in data-informed design, and how can I avoid them?

Common pitfalls in data-informed design include over-reliance on data, ignoring qualitative insights, and failing to set clear objectives. To avoid these issues, always balance data with designer intuition, consider the context behind the numbers, and start each project with well-defined goals. Regular iteration and testing are also key to ensuring your designs meet user